ansible

Dec 28, 2017

Lately I have been using Ansible almost constantly for a variety of automated deployments to OpenStack, AWS, and Azure.

Along the way, I've run into a variety of approaches to structuring Ansible playbooks. Some use a separate playbook for each state change, with one playbook to start the environment and another to stop it. Others pass in a single extra_var that determines the state of the environment, and one playbook handles both starting and stopping it. I've even seen the environment's states split out into different playbooks in different repos.
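For illustration, the single-extra_var approach boils down to one entry point where the caller picks the state. Below is a minimal bash sketch of a wrapper around such a playbook. The playbook name `environment.yml`, the variable `env_state`, its `started`/`stopped` values, and the function name are all hypothetical, not taken from any particular repo. Setting `ANSIBLE_PLAYBOOK=echo` turns it into a dry run that prints the command instead of running it.

```shell
# Hypothetical wrapper: one playbook, with the desired state passed as an extra var.
# environment.yml and env_state are illustrative names only.
set_env_state() {
  local state="$1"
  # Only accept the states the (hypothetical) playbook knows how to handle.
  case "$state" in
    started|stopped) ;;
    *)
      echo "usage: set_env_state started|stopped" >&2
      return 2
      ;;
  esac
  # ANSIBLE_PLAYBOOK can be overridden (e.g. with echo) for a dry run.
  "${ANSIBLE_PLAYBOOK:-ansible-playbook}" environment.yml -e "env_state=${state}"
}
```

For example, `set_env_state stopped` would run `ansible-playbook environment.yml -e env_state=stopped`; inside the playbook, tasks would then branch on `env_state`, for instance with `when:` conditions.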

VMware

Sep 25, 2015

My wife's laptop backup drive is starting to die. It's an external FireWire drive that's only about 5 years old, but I think we've definitely gotten our use out of it. Needless to say, she's a little concerned about losing some of the data on it, so I told her to copy it over to our home file server, which is automatically backed up to the cloud. She started copying the data over, but since the drive is having a rough time, the GUI copy kept failing because some of the files are now corrupted.

So I took the drive and connected it directly to my Ubuntu system, where it came up as /dev/sdg. The first step is to install hfsprogs, which provides the HFS+ filesystem tools:

```shell
apt-get install hfsprogs
```

Once it was installed, I wanted to run fsck on the filesystem first, to be safe and give myself the best chance of copying the data over. To find the right partition, I ran:

```
fdisk -l
[...]
Disk /dev/sdg doesn't contain a valid partition table
```

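That didn't surprise me: Macs don't use an MBR partition table, and this drive is a little wonky. (As the parted output shows, this drive actually uses an Apple partition map, which parted reports as "mac".) Instead, I used GNU Parted: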

```
parted
(parted) select /dev/sdg
Using /dev/sdg
(parted) print
Model: Ext Hard Disk (scsi)
Disk /dev/sdg: 500GB
Sector size (logical/physical): 512B/512B
Partition Table: mac

Number  Start   End     Size    File system  Name             Flags
 1      512B    32.8kB  32.3kB               Apple
 2      32.8kB  61.4kB  28.7kB               Macintosh
 3      61.4kB  90.1kB  28.7kB               Macintosh
 4      90.1kB  119kB   28.7kB               Macintosh
 5      119kB   147kB   28.7kB               Macintosh
 6      147kB   410kB   262kB                Macintosh
 7      410kB   672kB   262kB                Macintosh
 8      672kB   934kB   262kB                Patch Partition
10      135MB   500GB   500GB   hfs+         Untitled
```
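If you'd rather not eyeball that table, parted also has a machine-readable mode (`parted -s -m /dev/sdg print`) that prints one colon-separated line per partition in the form `number:start:end:size:filesystem:name:flags;`. A minimal bash sketch, assuming that format, that picks out the HFS+ partition numbers (the function name is hypothetical):

```shell
# Print the numbers of partitions that parted reports as hfs+.
# Expects `parted -s -m <device> print` output on stdin, where each partition
# line is number:start:end:size:filesystem:name:flags; (field 5 = filesystem).
# The "BYT;" header and the device line don't have hfs+ in field 5, so they are skipped.
hfsplus_partitions() {
  awk -F: '$5 == "hfs+" { print $1 }'
}
```

Run as `parted -s -m /dev/sdg print | hfsplus_partitions`, it should print `10` for this drive.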

The HFS+ data is on partition 10, so that's the one to run fsck on:

```shell
fsck.hfsplus -f /dev/sdg10
```

Once that completed successfully, I mounted the partition:

```shell
mount -t hfsplus /dev/sdg10 /media/usbdrive
```

Finally, to copy the data in a way that could be rerun several times, rather than stopping at the first read or I/O error, I used rsync:

```shell
rsync -avE /media/usbdrive /media/backupsystem
```

Enjoy! In my experience, HFS+ support in Linux is very good. This copy took about 12 hours, with many retries, to get what I could off the drive.
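rsync carries on past files it can't read (exiting with code 23, "partial transfer due to error", in that case), and with `-a` a rerun skips files whose size and modification time already match, so the retries can be automated. A minimal bash sketch, assuming you want a fixed number of passes with a pause between them; `retry` and `RETRY_DELAY` are hypothetical names, not part of rsync:

```shell
# Rerun a command until it exits 0 or max_attempts is reached.
# Usage: retry <max_attempts> <command> [args...]
# RETRY_DELAY sets the pause in seconds between attempts (default 10).
retry() {
  local max_attempts="$1"; shift
  local attempt=1
  until "$@"; do
    if (( attempt >= max_attempts )); then
      echo "retry: giving up after ${attempt} attempts: $*" >&2
      return 1
    fi
    echo "retry: attempt ${attempt} failed, trying again" >&2
    attempt=$(( attempt + 1 ))
    sleep "${RETRY_DELAY:-10}"
  done
}
```

For example: `retry 5 rsync -avE /media/usbdrive /media/backupsystem`. On a drive with permanently unreadable files, rsync will keep returning a non-zero code, which is why the loop gives up after a fixed count instead of running forever.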

VMware

Sep 17, 2015

Changes around the Windows Store and Windows Update have made creating a Windows 10 VMware template a little more involved. I tried building one the usual way: install from ISO, log in, make the modifications we want (such as installing VMware Tools), and then gracefully power the system off. Then I used the Deploy from Template option with a guest customization to Sysprep the system. That doesn't work: any Windows Store application, such as the Start menu or the Windows Store itself, simply doesn't start.

VMware says Windows 10 guest customization works just fine, per its Guest OS instruction page:

http://partnerweb.vmware.com/GOSIG/Windows_10.html

This is a tad misleading: following those instructions alone does not get Sysprep, run through a guest customization, to complete correctly. To make Sysprep work with Windows 10, the system needs to be put into audit mode.

The easiest way I found to enter audit mode when setting up a VMware template (at least, it worked for my setup):

- Provision a blank system from DVD.

- When the system boots for the first time, at the Welcome / Customize privacy settings screen, press Ctrl+Shift+F3 to restart into audit mode.

- Make the legacy configuration changes and setup (such as creating the local account you want on the system using Computer Management, or other fun legacy, non-Windows Store apps).

- Do a graceful shutdown.

- Turn the system into a template.

This worked very well for my setup. Are there better ways to set up a clean Win10 template?

Server Virtualization

Mar 16, 2015

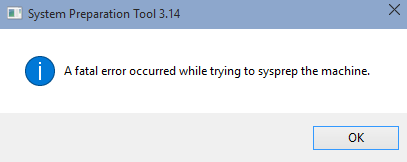

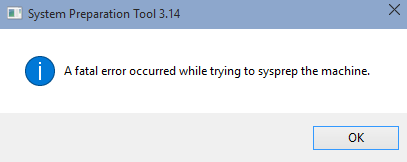

I've been working on making Windows 10 available in our private cloud as a blueprint, and the deployment kept failing when trying to Sysprep it. Logging in directly to the system and running Sysprep shows this error.

Digging further, we find this text in C:\Windows\System32\Sysprep\Panther\setuperr.log:

```
SYSPRP Package Microsoft.Getstarted_1.5.22.1_x64_8wekyb3d8bbwe was installed for a user, but not provisioned
SYSPRP Failed to remove apps for the current user
```

This is caused by the Windows Store installing apps for the logged-in user while the template is online and connected to the network.

Resolution

Two solutions I've found so far:

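- Keep the system disconnected from the network while installing the OS.

- If that doesn't work, clean up the template by removing each offending package in PowerShell, replacing `packagename` with the package named in setuperr.log (for example, Microsoft.Getstarted):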

```powershell
Get-AppxPackage -Name *packagename* | Remove-AppxPackage
```
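To find what to substitute for `packagename`, the names can be pulled out of setuperr.log. A minimal bash sketch, assuming the log lines look like the ones above and that a bash shell is handy (for example, after copying the log off the VM); the function name is hypothetical. It prints the part of each package full name before the first underscore, which is the package Name that `Get-AppxPackage -Name` matches:

```shell
# Extract the package Names that Sysprep reports as "installed for a user,
# but not provisioned" from setuperr.log (file arguments, or stdin if none).
# A package full name is Name_Version_Arch_..., so the Name is everything
# before the first underscore; duplicates are removed.
sysprep_blocking_packages() {
  sed -n 's/.*SYSPRP Package \([^ ]*\) was installed for a user, but not provisioned.*/\1/p' "$@" |
    cut -d_ -f1 |
    sort -u
}
```

Run against the log lines above, `sysprep_blocking_packages setuperr.log` prints `Microsoft.Getstarted`, which then goes into the PowerShell command on the template.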

VMware

Nov 7, 2012

Jason Boche posted an interesting discovery about the ESXi heap size limit as it relates to monster VMs and the amount of storage attached to them. One of the large projects being designed today is likely to hit this limit with just a single VM, since a single VM will have about 75TB assigned to it. The design has 4 or 5 VMs of this size, which means our standard ESXi host will have between 300 and 375 TB assigned to it. Thankfully, RDMs aren't affected by this limit, at least in theory, so there is a workaround available. Not ideal, but doable.
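The sizing arithmetic is easy to script when comparing designs: 4 VMs × 75TB = 300TB and 5 VMs × 75TB = 375TB per host. A minimal bash sketch; the function names are hypothetical, and the per-host limit is passed in as a parameter because the actual figure depends on the ESXi version and heap setting discussed in Boche's post, and isn't given here. Bash arithmetic is integer-only, so values are whole TB.

```shell
# Total TB of VMDKs one host would have open: VM count times TB per VM.
host_vmdk_tb() {
  local vm_count="$1" tb_per_vm="$2"
  echo $(( vm_count * tb_per_vm ))
}

# Succeeds (exit 0) if a host total in TB is above the supplied limit in TB.
exceeds_limit() {
  local total_tb="$1" limit_tb="$2"
  (( total_tb > limit_tb ))
}

# The two cases from this design.
for vms in 4 5; do
  echo "${vms} VMs x 75TB = $(host_vmdk_tb "$vms" 75)TB"
done
```

With the documented limit for your build in hand, `exceeds_limit "$(host_vmdk_tb 5 75)" <limit_tb>` tells you whether a host design needs the RDM workaround.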
